The Evolving Landscape of MLOps: Streamlining Machine Learning Pipelines in 2024

Machine learning (ML) has become a transformative force across industries, but its true potential can only be unlocked through effective deployment and management. This is where MLOps, the practice of merging machine learning with operations, comes into play. In 2024, MLOps continues to evolve, offering organizations a robust and efficient framework for building, deploying, and maintaining production-ready ML models.

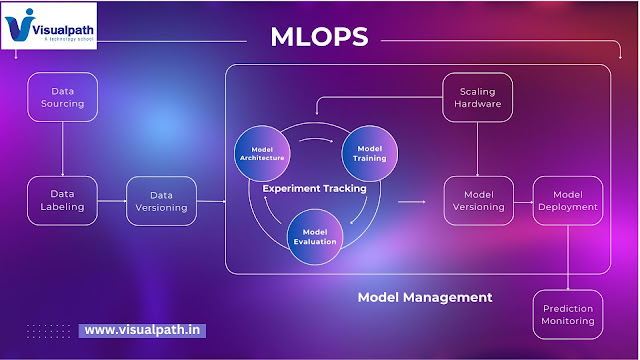

The Need for MLOps

The journey of an ML model, from conception to real-world impact, is complex. Traditional software development methodologies often fall short when dealing with the iterative nature of ML. Data scientists grapple with version control, experiment tracking, and ensuring data quality throughout the model lifecycle. Additionally, deploying and monitoring models in production requires expertise in infrastructure management and performance optimization. MLOps bridges this gap by establishing a standardized workflow that streamlines the entire ML pipeline.

Key MLOps Components in 2024

The MLOps ecosystem spans a wide range of tools and technologies. Here's a breakdown of the key components in 2024:

- Version Control and Experiment Tracking: Git versions code, while tools like MLflow record the parameters, metrics, and model artifacts of each training run. Experiment tracking lets data scientists capture and compare those runs, which supports reproducibility and knowledge sharing.

- Data Management: MLOps emphasizes robust data management practices. Feature stores act as centralized repositories for model features, ensuring consistency and simplifying feature engineering. Data versioning ensures that models are trained on consistent datasets, preventing unexpected performance degradation. Data quality monitoring tools proactively identify and address data issues that could negatively impact model performance.

- Model Training and Serving Infrastructure: Containerization with Docker packages models and their dependencies into portable images, and orchestrators like Kubernetes deploy and scale those containers across diverse infrastructure environments. Serverless computing is gaining traction in MLOps, offering a cost-effective and scalable option for model serving. Additionally, GPU cloud servers and serverless GPUs provide access to powerful compute resources for training and inference.

- Model Deployment and Management: MLOps platforms facilitate automated model deployment, eliminating manual configuration and streamlining the transition from development to production. Model registries act as central repositories for storing, managing, and versioning models, enabling easy retrieval and governance.

- Model Monitoring and Observability: Once deployed, models require continuous monitoring to ensure they perform as expected. MLOps tools provide real-time insights into model behavior, including drift detection, fairness metrics, and explainability analysis. This allows data scientists and engineers to proactively identify and address performance issues.
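
To make the drift detection mentioned in the monitoring component more concrete, here is a minimal sketch of one common approach, the Population Stability Index (PSI), which compares the distribution of a feature in live traffic against the distribution seen at training time. The function name, the bin count, and the `eps` floor are illustrative assumptions, not part of any particular MLOps tool.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,      # assumed bin count; tune per feature
    eps: float = 1e-6,   # assumed floor so empty bins do not produce log(0)
) -> float:
    """Return the PSI between a reference sample and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the reference range; fall back to 1.0 if the reference is constant.
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into the edge bins
            counts[idx] += 1
        total = len(values)
        return [max(c / total, eps) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical data: a training-time feature sample and a shifted live sample.
reference = [i / 100 for i in range(1000)]
live = [x + 5 for x in reference]

print("PSI, unchanged data:", population_stability_index(reference, reference))
print("PSI, shifted data:  ", population_stability_index(reference, live))
```

A PSI near zero suggests the live data still resembles the training data. Values above roughly 0.2 are often treated as a signal to investigate, though that cutoff is a convention rather than a rule, and a production monitor would typically track many features and use a dedicated library.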

The Rise of Open-Source and Closed-Source Solutions

The MLOps landscape thrives on a healthy mix of open-source and closed-source solutions. Open-source tools, like Kubeflow and MLflow, offer flexibility and a vibrant community for support. However, they often require more customization and integration effort. Closed-source platforms, on the other hand, provide pre-built workflows, enterprise-grade features, and dedicated support, making them suitable for organizations seeking a more streamlined experience.

The Evolving Role of MLOps Engineers

The growing adoption of MLOps has led to the rise of a specialized role: the MLOps engineer. These individuals bridge the gap between data science and operations, possessing expertise in both ML fundamentals and software engineering principles. Their skillset encompasses containerization technologies, version control systems, and familiarity with MLOps tools.

MLOps in the Age of Responsible AI

As the ethical implications of AI become increasingly prominent, MLOps plays a crucial role in promoting Responsible AI practices. By integrating fairness checks, bias detection tools, and explainability frameworks into the ML pipeline, MLOps helps ensure that deployed models are fair, unbiased, and transparent.
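
One way a fairness check might be wired into a pipeline is as a gate that runs before deployment. The sketch below computes the demographic parity difference, meaning the gap between the highest and lowest positive-prediction rates across groups, and fails the gate if the gap exceeds a threshold. The function names, the sample data, and the 0.1 threshold are all hypothetical; demographic parity is only one of several fairness metrics, and the right one depends on the use case.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(predictions: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Fraction of positive (== 1) predictions within each group."""
    totals: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Gap between the highest and lowest group selection rates (0.0 means equal rates)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def passes_fairness_gate(
    predictions: Sequence[int],
    groups: Sequence[Hashable],
    max_gap: float = 0.1,  # assumed threshold; set per policy and use case
) -> bool:
    """Return True if the selection-rate gap is within the allowed threshold."""
    return demographic_parity_difference(predictions, groups) <= max_gap


# Hypothetical validation-set predictions and a sensitive attribute per row.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
attr = ["a", "a", "a", "a", "b", "b", "b", "b"]

print("selection rates:", selection_rates(preds, attr))
print("parity gap:", demographic_parity_difference(preds, attr))
print("gate passed:", passes_fairness_gate(preds, attr))
```

In a CI/CD setting, a failed gate would typically block promotion of the model to the registry's production stage and prompt a review, rather than silently logging a metric.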

The Future of MLOps

Looking ahead, MLOps will continue to evolve, driven by advancements in automation, infrastructure management, and interpretability tools. The rise of AutoML and AutoOps holds promise for further streamlining the ML lifecycle, while advancements in explainability techniques will enable better understanding of model decision-making. Additionally, the integration of MLOps with security frameworks will be crucial for securing sensitive data and models in production environments.

Conclusion

MLOps is no longer an optional add-on but a vital component for organizations that want to unlock the full potential of machine learning. By embracing a robust MLOps strategy, businesses can streamline ML development, ensure model quality, and deliver real-world value with confidence. As the MLOps landscape continues to evolve in 2024 and beyond, organizations that embrace these practices will be well-positioned to leverage the power of AI for sustainable competitive advantage.

